Feature enhancements & A/B testing

SHAPE’s Feature enhancements & A/B testing service improves products through experimentation by designing controlled experiments, shipping variants safely, and turning results into confident product decisions. Learn how A/B testing works, when to use it, and a practical step-by-step process to run experiments that compound outcomes.


Improving products through experimentation is how SHAPE helps teams ship smarter: we design, run, and analyze feature enhancements & A/B testing so you can increase conversion, retention, and revenue with evidence—not opinions. Whether you’re optimizing onboarding, pricing, search, or new feature adoption, we turn product changes into measurable experiments with clear decision criteria.


Feature enhancements & A/B testing make product decisions defensible: every change is tied to measured results from a controlled experiment.


Teams usually start feature enhancements & A/B testing for one reason: they need to improve products through experimentation without guessing. This page explains what A/B testing is in practice, how to run experiments responsibly, and how to translate outcomes into scalable feature enhancements.

What is A/B testing (and why it belongs in feature enhancements)?

A/B testing (also called split testing) is a controlled experiment where users are randomly assigned to different versions of a product experience, typically a control (A) and a variant (B), and outcomes are compared using predefined metrics. In SHAPE engagements, A/B testing is the core method behind feature enhancements because it’s the most reliable way to attribute an outcome change to a specific product change.

What A/B testing does (in plain terms)

SHAPE runs A/B tests with clear hypotheses, disciplined measurement, and safe rollouts.

How A/B testing works in modern products

In digital products, A/B tests commonly run through a feature flag or experimentation platform that assigns users to variants and logs outcomes. The core requirement is the same across stacks: assignment must be random, consistent (sticky per user/session when appropriate), and measurable.
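The three assignment requirements (random, sticky, measurable) can be sketched with deterministic hashing, a common technique in flag and experimentation tools. This is a minimal Python sketch, not any specific platform's API; the function name `assign_variant`, the experiment key, and the 50/50 split are illustrative assumptions.

```python
import hashlib


def assign_variant(unit_id: str, experiment_key: str,
                   variants=("control", "variant_b"), weights=(50, 50)) -> str:
    """Deterministically map a unit (user, session, or account ID) to a variant.

    Hashing experiment_key together with unit_id yields a stable, roughly uniform
    bucket in [0, 100): the same unit always gets the same variant (sticky), and
    different experiments are bucketed independently of each other.
    """
    if len(variants) != len(weights) or sum(weights) != 100:
        raise ValueError("weights must align with variants and sum to 100")
    digest = hashlib.sha256(f"{experiment_key}:{unit_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # first 32 bits of the hash -> bucket 0..99
    cumulative = 0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if bucket < cumulative:
            return variant
    return variants[-1]  # not reached when weights sum to 100
```

Because the bucket comes from a hash rather than stored state, any service that knows the experiment key and unit ID computes the same variant, which keeps exposure logging consistent across the stack.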

Common experiment unit choices

Common outcomes for improving products through experimentation

Note: A well-run A/B test also tracks guardrail metrics (latency, error rate, refund rate, complaint rate) so a “win” doesn’t create hidden damage.

Why feature enhancements & A/B testing improve outcomes

Shipping improvements without measurement often creates a false sense of progress. Feature enhancements & A/B testing make progress real by tying change to outcomes—improving products through experimentation while preserving trust and stability.

Benefits you can measure

Common failure modes we prevent

Experiment design essentials (what makes results trustworthy)

1) Hypothesis-first design

Every experiment should start with a single sentence: If we change X for audience Y, then metric Z will improve because…. This keeps feature enhancements & A/B testing focused on improving products through experimentation rather than shipping random variants.
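One way to enforce hypothesis-first design is to make the hypothesis a required, structured field of every experiment definition. The `Hypothesis` class below is a hypothetical sketch, not a SHAPE artifact, and the example values are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """Structured form of 'If we change X for audience Y, then metric Z will improve because ...'."""
    change: str     # X: the product change being tested
    audience: str   # Y: who is eligible for the experiment
    metric: str     # Z: the single primary metric
    rationale: str  # why the change is expected to move Z

    def __post_init__(self) -> None:
        # Reject empty fields so no experiment starts without a complete hypothesis.
        for name in ("change", "audience", "metric", "rationale"):
            if not getattr(self, name).strip():
                raise ValueError(f"hypothesis field '{name}' must not be empty")

    def sentence(self) -> str:
        return (f"If we change {self.change} for {self.audience}, "
                f"then {self.metric} will improve because {self.rationale}.")


# Illustrative values only.
h = Hypothesis(
    change="the signup form from 3 steps to 2",
    audience="new mobile visitors",
    metric="signup completion rate",
    rationale="fewer steps reduce drop-off",
)
```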

2) Primary metric + guardrails

A primary metric tells you if the change worked. Guardrails tell you if it created unintended harm.
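A minimal sketch of pairing one primary metric with guardrails, assuming each guardrail is a "lower is better" metric with a maximum tolerated relative increase. The names and thresholds are hypothetical, and the check compares point estimates only; a real readout would also test whether each difference is statistically significant.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MetricResult:
    name: str
    control: float  # observed value in the control group
    variant: float  # observed value in the variant group


def relative_change(m: MetricResult) -> float:
    """(variant - control) / control."""
    if m.control == 0:
        raise ValueError(f"control value for {m.name} is zero; relative change undefined")
    return (m.variant - m.control) / m.control


def evaluate(primary: MetricResult,
             guardrails: list[tuple[MetricResult, float]]) -> dict:
    """Primary metric: did it work? Guardrails: did it cause harm?

    Each guardrail is paired with the maximum tolerated relative increase
    (e.g. 0.05 means 'no more than 5% worse than control').
    """
    breaches = [g.name for g, max_increase in guardrails
                if relative_change(g) > max_increase]
    return {"primary_lift": relative_change(primary), "guardrail_breaches": breaches}
```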

3) Randomization, sample size, and test duration

To make A/B testing reliable, you need enough exposure to detect real differences. Sample size depends on the baseline rate, the minimum lift you want to detect, the significance level (confidence), and the statistical power.
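For a conversion-rate metric, the standard normal-approximation formula for comparing two proportions turns those inputs into a required sample size per variant. The sketch below assumes a two-sided test, an equal traffic split, and illustrative defaults of alpha = 0.05 and 80% power; `duration_days` is a hypothetical helper that converts sample size into calendar time from daily eligible traffic.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in EACH variant to detect baseline -> baseline * (1 + relative_lift)."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must differ and lie strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def duration_days(n_per_variant: int, n_variants: int, eligible_users_per_day: int) -> int:
    """Calendar days needed, assuming traffic is split evenly across variants."""
    return math.ceil(n_per_variant * n_variants / eligible_users_per_day)
```

For example, a 10% baseline conversion rate and a 10% relative lift (10% to 11%) needs roughly 14,750 users per variant under these assumptions, so at 2,000 eligible users per day a two-variant test runs for about 15 days. Halving the detectable lift roughly quadruples the required sample.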

Don’t ship conclusions faster than your traffic can support. Improving products through experimentation means respecting statistical power and stopping rules.

4) Avoiding contamination and novelty effects

Contamination happens when users see multiple variants (or influence each other). Novelty effects happen when people temporarily behave differently because something is new. Both are manageable with correct unit selection and appropriate test length.
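Contamination can be measured directly from an exposure log: any unit that appears under more than one variant suggests assignment is not sticky or the wrong experiment unit was chosen. A minimal sketch, assuming the log is available as `(unit_id, variant)` pairs; the function names are hypothetical.

```python
from collections import defaultdict


def find_contaminated_units(exposures):
    """Return unit IDs that were exposed to more than one variant.

    exposures: iterable of (unit_id, variant) pairs from an exposure log.
    """
    variants_seen = defaultdict(set)
    for unit_id, variant in exposures:
        variants_seen[unit_id].add(variant)
    return {unit for unit, variants in variants_seen.items() if len(variants) > 1}


def contamination_rate(exposures) -> float:
    """Share of exposed units that saw more than one variant."""
    exposures = list(exposures)  # allow any iterable, consumed once here
    units = {unit_id for unit_id, _ in exposures}
    if not units:
        return 0.0
    return len(find_contaminated_units(exposures)) / len(units)
```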

How SHAPE runs feature enhancements & A/B testing

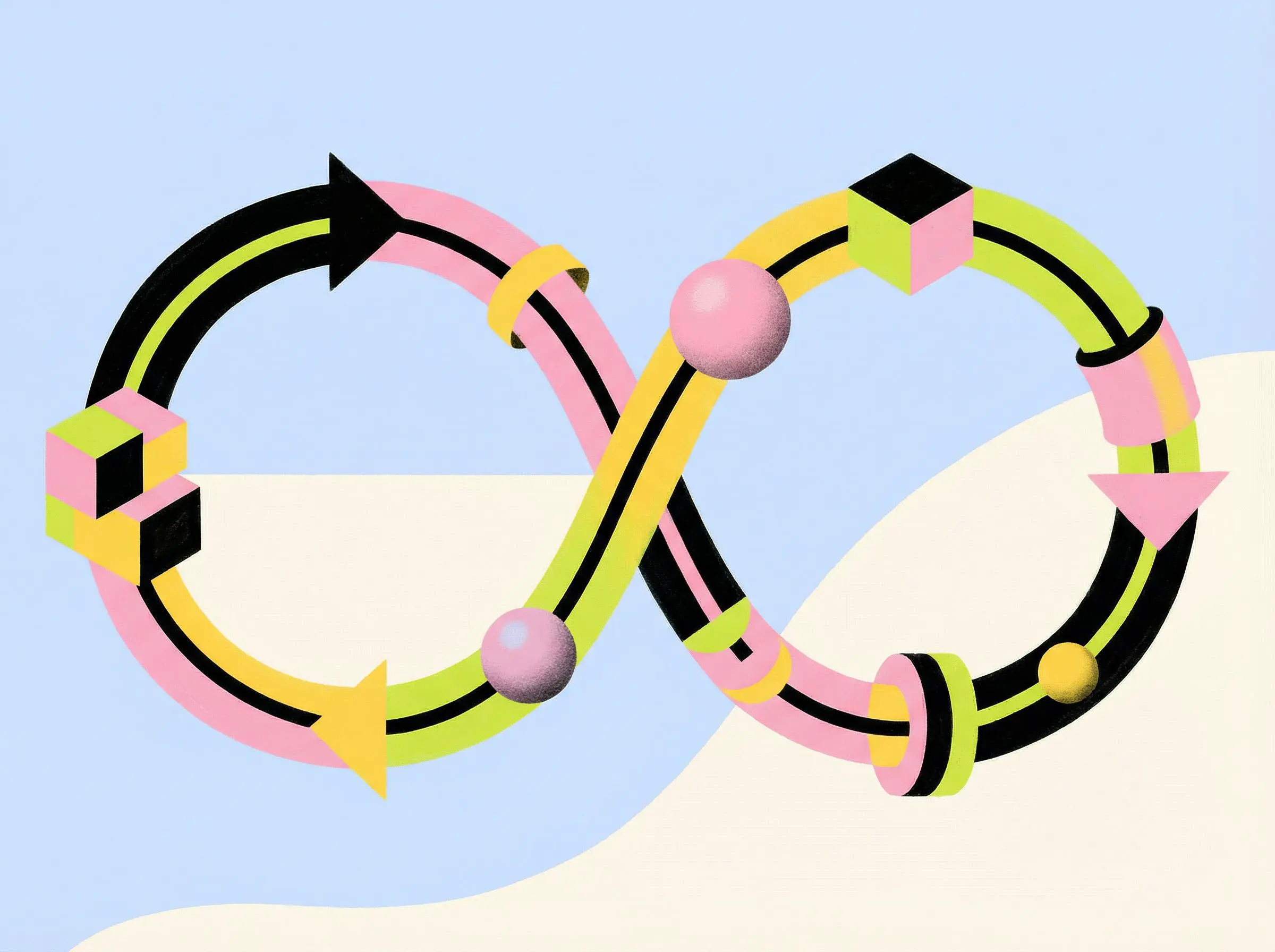

We treat experimentation as a product operating system: decide what to test, ship it safely, measure outcomes, and convert learnings into durable feature enhancements. This is what improving products through experimentation looks like when it’s repeatable.

1) Identify the highest-leverage opportunity

We start with user friction and business impact. We often combine analytics with UX research & usability testing to ensure you’re experimenting on real problems.

2) Define an experiment plan that engineering can ship

3) Ship with quality gates and safe releases

Experimentation code is still production code. We reduce risk by pairing feature enhancements & A/B testing with Manual & automated testing.
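A safe release is often implemented as a percentage gate on a feature flag, plus a kill switch that turns the change off without a deploy. This sketch is not any particular flag vendor's API; `in_rollout`, the flag key, and the percentages are assumptions. Hashing the user ID means raising the percentage only adds users, so nobody flips between experiences during a ramp.

```python
import hashlib


def in_rollout(user_id: str, flag_key: str, rollout_percent: float,
               kill_switch: bool = False) -> bool:
    """Return True if this user should receive the flagged change."""
    if kill_switch:
        return False  # instant off switch, no redeploy needed
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10000 / 100  # stable bucket in 0.00 .. 99.99
    return bucket < rollout_percent
```

A ramp might move from 1% to 10% to 50% to 100%, checking guardrails at each stage before widening exposure.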

4) Analyze results and translate them into next actions

Not every test yields a “win”—and that’s fine. The output is a decision: ship, iterate, or stop. Over time, this is how teams keep improving products through experimentation without roadmap thrash.
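The ship, iterate, or stop decision can be made explicit in code. This sketch uses a pooled two-proportion z-test for a conversion metric and refuses to conclude before the planned sample size is reached (a fixed-horizon stopping rule). Mapping an inconclusive result to "iterate" and any guardrail breach to "stop" are illustrative assumptions, not a universal policy.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (absolute difference B - A, two-sided p-value) using a pooled z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return p_b - p_a, 1.0  # all-or-nothing conversions: no evidence either way
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, p_value


def decide(conv_a: int, n_a: int, conv_b: int, n_b: int, required_n: int,
           alpha: float = 0.05, guardrail_breached: bool = False) -> str:
    if guardrail_breached:
        return "stop"          # harm detected: stop regardless of the primary metric
    if min(n_a, n_b) < required_n:
        return "keep running"  # don't peek and stop early
    diff, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    if p_value < alpha:
        return "ship" if diff > 0 else "stop"
    return "iterate"           # inconclusive: refine the hypothesis or the variant
```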


Use case explanations

1) Onboarding drop-offs (activation is stuck)

We design feature enhancements & A/B testing around the activation funnel—changing one friction point at a time (copy, steps, defaults, guidance). The goal is improving products through experimentation with measurable lift in completion and time-to-value.
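When a test changes one onboarding friction point, comparing step-to-step conversion per variant shows whether the lift actually came from that step. A minimal sketch; `funnel_conversion` and `weakest_step` are hypothetical helpers, and the step counts in the usage lines are invented for illustration.

```python
def funnel_conversion(step_counts):
    """step_counts: users reaching each funnel step, in order (first = entered the funnel).

    Returns (step-to-step conversion rates, overall completion rate).
    """
    if not step_counts or step_counts[0] == 0:
        raise ValueError("the first step must have at least one user")
    step_rates = [after / before if before else 0.0
                  for before, after in zip(step_counts, step_counts[1:])]
    overall = step_counts[-1] / step_counts[0]
    return step_rates, overall


def weakest_step(step_rates):
    """Index of the transition with the lowest conversion (the biggest drop-off)."""
    return min(range(len(step_rates)), key=lambda i: step_rates[i])


# Illustrative counts only: control vs. a variant that changed the first step.
control_rates, control_overall = funnel_conversion([1000, 600, 450, 300])
variant_rates, variant_overall = funnel_conversion([1000, 700, 520, 360])
```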

2) Pricing and packaging changes feel risky

Pricing experiments need guardrails (refunds, cancellations, support volume). We test variants with clear segmentation and decision thresholds, then roll out safely.

3) Search, recommendations, or sorting need measurable improvement

We test ranking and UI changes using objective outcomes (click-through, add-to-cart, task completion) while monitoring relevance guardrails. If performance under load is a concern, we often pair with Performance & load testing.

4) Feature adoption is low after launch

We treat adoption as an experiment space: discovery placement, in-product education, default settings, and prompts. Feature enhancements & A/B testing clarify what actually increases usage rather than what “should” work.

5) B2B products with account-level behavior

In B2B, experiments can be distorted by team collaboration and sales-driven onboarding. We use account-level assignment, strict eligibility rules, and cohort analysis to keep experiment results trustworthy.
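For account-level assignment, the hash key is the account ID rather than the user ID, so everyone on a team sees the same variant, and eligibility is checked before any assignment happens. The eligibility rules here (excluding internal accounts, very new accounts, and accounts in sales-led onboarding), the 14-day threshold, and all names are hypothetical examples.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class User:
    user_id: str
    account_id: str
    account_age_days: int
    is_internal: bool = False          # employees and test accounts
    in_sales_onboarding: bool = False  # accounts still in sales-led setup


def is_eligible(user: User, min_account_age_days: int = 14) -> bool:
    # Hypothetical strict eligibility rules applied before assignment.
    return (not user.is_internal
            and not user.in_sales_onboarding
            and user.account_age_days >= min_account_age_days)


def assign_by_account(user: User, experiment_key: str,
                      variants=("control", "variant_b")) -> Optional[str]:
    """Return the account's variant, or None if the user is not eligible."""
    if not is_eligible(user):
        return None
    # Hash the ACCOUNT id so all teammates share one variant (no cross-variant contamination).
    digest = hashlib.sha256(f"{experiment_key}:{user.account_id}".encode("utf-8")).hexdigest()
    return variants[int(digest[:8], 16) % len(variants)]
```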


Step-by-step tutorial: run feature enhancements & A/B testing that leads to real product improvement

This workflow mirrors how SHAPE helps teams improve products through experimentation—from hypothesis to rollout.

Treat every experiment as a reusable pattern—hypothesis → safe rollout → measurement → decision—so feature enhancements compound instead of resetting each sprint.

Who are we?

Shape helps companies build in-house AI workflows that optimise their business. If you’re looking for efficiency, we believe we can help.

Customer testimonials

Our clients love the speed and efficiency we provide.

FAQs

Find answers to your most pressing questions about our services and data ownership.

All generated data is yours. We prioritize your ownership and privacy. You can access and manage it anytime.

Absolutely! Our solutions are designed to integrate seamlessly with your existing software. Regardless of your current setup, we can find a compatible solution.

We provide comprehensive support to ensure a smooth experience. Our team is available for assistance and troubleshooting. We also offer resources to help you maximize our tools.

Yes, customization is a key feature of our platform. You can tailor the nature of your agent to fit your brand's voice and target audience. This flexibility enhances engagement and effectiveness.

We adapt pricing to each company and their needs. Since our solutions consist of smart custom integrations, the end cost heavily depends on the integration tactics.