Bias detection & mitigation

SHAPE’s Bias Detection & Mitigation service helps organizations identify and reduce unfair model behavior using slice-based evaluation, practical fairness metrics, and deployable mitigation strategies. The page outlines governance-ready deliverables, real-world use cases, and a step-by-step playbook to operationalize fairness monitoring in production.

Bias detection & mitigation is how SHAPE helps teams identify and reduce unfair model behavior in machine learning and AI systems—before harm becomes a customer issue, compliance issue, or brand issue. We measure disparities across groups, locate the drivers (data, features, thresholds, or process), and implement mitigations you can operate in production.

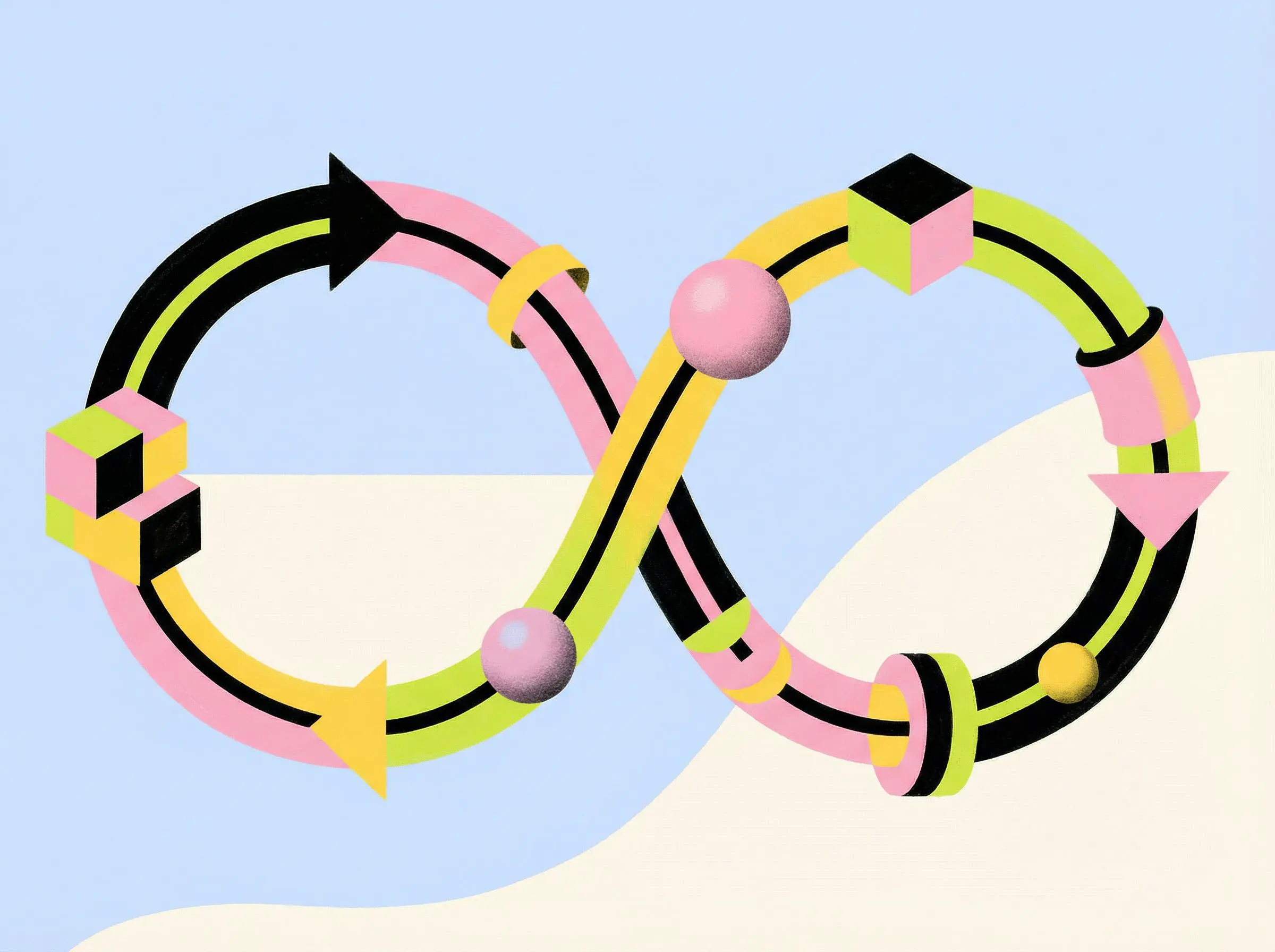

Bias detection & mitigation is a lifecycle practice: measure disparities, reduce unfair model behavior, and monitor continuously.

What is bias detection & mitigation?

Bias detection & mitigation is the practice of measuring whether an AI system produces systematically different outcomes for different groups, and then changing the system to reduce the disparities that are found. In production environments, bias is rarely a single metric; it’s a set of trade-offs that must be defined, tested, and governed.

Bias is context-dependent (and must be defined)

“Fair” is not one universal number. Effective bias detection & mitigation starts with a clear definition of the decision and the impact surface:

If you can’t write down what “unfair model behavior” means for your decision, you can’t detect it—and you can’t mitigate it.

Related services

Bias detection & mitigation is strongest when it’s paired with governance, monitoring, and explainability. Teams commonly combine this work with:

Why identifying and reducing unfair model behavior matters

AI systems don’t just produce predictions—they shape access to resources, opportunities, and outcomes. Bias detection & mitigation helps you identify and reduce unfair model behavior so decisions are more consistent, defensible, and trustworthy.

Measurable outcomes of bias detection & mitigation

Common failure modes we prevent

If you ship models into changing environments, identifying and reducing unfair model behavior must continue after launch.

Bias detection & mitigation toolkit (BDT): techniques and outputs

SHAPE applies a practical toolkit approach to bias detection & mitigation: define the fairness target, measure disparities across relevant slices, diagnose drivers, and implement mitigations that you can monitor. Using the same toolkit for every model keeps the work consistent across models and teams.

1) Data and cohort diagnostics (before model changes)

We start by checking whether data issues are creating downstream unfairness. Typical checks include:

2) Fairness metrics (choose what matches the decision)

Different decisions require different fairness definitions. For bias detection & mitigation, we select metrics that match risk and workflow, such as:

3) Slice-based evaluation (where unfair behavior hides)

We evaluate performance and fairness across meaningful slices, not only a global score:

4) Mitigation strategies (what we actually change)

Once unfair model behavior is detected, mitigation can happen at multiple layers:

/* Bias detection & mitigation operating rule:
If you can’t name the disparity, reproduce it by slice, and show which change reduced it,
you don’t have a mitigation; you have a hope. */

5) Evidence artifacts (so work is defensible)

Bias detection & mitigation should produce audit-ready artifacts such as:

Governance, auditability, and monitoring

Bias detection & mitigation must be operational. SHAPE helps teams implement governance and monitoring so identifying and reducing unfair model behavior is repeatable across releases and measurable in production.

What we monitor (so disparity doesn’t drift back)

How we connect governance to delivery

To keep bias work enforceable, we connect it to production release discipline and evidence retention:

If fairness metrics aren’t monitored after launch, bias detection & mitigation becomes a one-time checkbox—and unfair model behavior returns quietly.

Use case explanations

Below are common scenarios where SHAPE delivers bias detection & mitigation to identify and reduce unfair model behavior while keeping systems operable and audit-ready.

1) Eligibility, approvals, or underwriting decisions need defensibility

We run fairness evaluations across protected and business-relevant cohorts, tune thresholds, and implement audit logs so high-impact decisions are explainable and consistent.

2) Fraud, abuse, or risk models are over-flagging certain segments

We measure false-positive disparity, diagnose feature/behavior drivers, and mitigate using data improvements, calibrated thresholds, and human review for uncertain cases.
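As a rough illustration of what measuring false-positive disparity can look like, the sketch below computes the false-positive rate per segment and the largest gap between segments. The record layout, segment names, and toy data are assumptions made for this example, not SHAPE's actual tooling.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Return {group: FPR}, where FPR = false positives / actual negatives.

    Each record is a (group, y_true, y_pred) tuple with 0/1 values.
    Groups without any actual negatives are left out of the result.
    """
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 0:
            negatives[group] += 1
            if y_pred == 1:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items() if n > 0}

def fpr_gap(rates):
    """Largest absolute FPR difference between any two groups."""
    values = list(rates.values())
    return max(values) - min(values) if values else 0.0

# Hypothetical toy data: (segment, actually_fraud, flagged_by_model)
records = [
    ("segment_a", 0, 0), ("segment_a", 0, 0), ("segment_a", 0, 1),
    ("segment_a", 0, 0), ("segment_a", 1, 1),
    ("segment_b", 0, 1), ("segment_b", 0, 1), ("segment_b", 0, 0),
    ("segment_b", 0, 0), ("segment_b", 1, 1),
]
rates = false_positive_rates(records)
gap = fpr_gap(rates)
print(rates, gap)
```

In this toy data, segment_b is flagged in error twice as often as segment_a, which is the kind of gap that would then be traced back to features, labels, or thresholds.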

3) Hiring, admissions, or triage workflows need bias controls

We help organizations define “fair enough” decision standards, evaluate by slice, and implement mitigations that fit operational constraints—so identifying and reducing unfair model behavior doesn’t break workflow speed.

4) Recommendations or ranking systems create unequal exposure

We measure exposure and outcome disparities across cohorts, adjust ranking objectives and constraints, and monitor for drift as catalogs and user behavior change.
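One common way to quantify exposure in a ranked list is to weight each position with a logarithmic discount (the same discount used in DCG) and sum the weights per cohort. The sketch below assumes that weighting and uses hypothetical cohort labels; the right exposure model depends on how users actually scan the list.

```python
import math
from collections import defaultdict

def exposure_share(ranked_cohorts):
    """Share of position-discounted exposure per cohort.

    ranked_cohorts: cohort label of each item, best-ranked first.
    Position p (1-based) gets weight 1 / log2(p + 1); this discount is an
    assumption of the sketch, not a universal exposure model.
    """
    exposure = defaultdict(float)
    for position, cohort in enumerate(ranked_cohorts, start=1):
        exposure[cohort] += 1.0 / math.log2(position + 1)
    total = sum(exposure.values())
    return {c: e / total for c, e in exposure.items()}

# Hypothetical ranking: both cohorts supply half the items,
# but cohort "a" occupies the top two slots.
shares = exposure_share(["a", "a", "b", "b"])
print(shares)
```

Comparing each cohort's exposure share with its share of eligible items gives a simple disparity signal that can be tracked as catalogs and user behavior change.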

5) You have strong overall metrics—but stakeholders suspect unfairness

We build a slice-first evaluation approach (including Explainable AI where needed) to pinpoint where disparity appears and which interventions actually reduce it.

Step-by-step tutorial: run bias detection & mitigation end-to-end

This playbook mirrors how SHAPE implements bias detection & mitigation to identify and reduce unfair model behavior from evaluation through production monitoring.

Write the decision the model influences, who is affected, and what “unfair” means in this context (e.g., error-rate disparity, selection-rate disparity, ranking exposure). Document constraints and acceptable trade-offs.

Define relevant groupings (where appropriate and permitted) plus operational slices (region, device, product segment). This becomes the backbone of bias detection & mitigation.

Check coverage gaps, label noise, missingness, and base-rate differences. Many cases of unfair model behavior start here—before the algorithm.
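A minimal sketch of these data checks, assuming rows are plain dictionaries and using hypothetical column names (`region`, `label`, `income`, `tenure`), might report coverage, base rate, and missingness per cohort:

```python
from collections import defaultdict

def cohort_diagnostics(rows, cohort_key, label_key, feature_keys):
    """Per-cohort coverage, positive base rate, and feature missingness.

    rows: list of dicts; a feature counts as missing when absent or None.
    feature_keys must be non-empty.
    """
    stats = defaultdict(lambda: {"n": 0, "positives": 0, "missing": 0})
    for row in rows:
        s = stats[row[cohort_key]]
        s["n"] += 1
        s["positives"] += 1 if row[label_key] == 1 else 0
        s["missing"] += sum(1 for k in feature_keys if row.get(k) is None)
    total = sum(s["n"] for s in stats.values())
    return {
        cohort: {
            "share_of_data": s["n"] / total,
            "base_rate": s["positives"] / s["n"],
            "missing_rate": s["missing"] / (s["n"] * len(feature_keys)),
        }
        for cohort, s in stats.items()
    }

# Hypothetical toy dataset
rows = [
    {"region": "north", "label": 1, "income": 50, "tenure": 3},
    {"region": "north", "label": 0, "income": 40, "tenure": 5},
    {"region": "north", "label": 1, "income": 60, "tenure": 2},
    {"region": "south", "label": 0, "income": None, "tenure": 1},
]
report = cohort_diagnostics(rows, "region", "label", ["income", "tenure"])
print(report)
```

Here the "south" cohort is both under-represented and missing half of its feature values, so any disparity measured later may be a data problem rather than a modeling problem. Label noise is not covered by this sketch; it usually needs a relabeled audit sample.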

Compute fairness and performance metrics across cohorts. Identify which disparities are statistically and operationally meaningful—and which are noise.
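To separate meaningful disparities from noise, one simple option is a two-proportion z-test on selection rates. The sketch below assumes two cohorts with known selection counts; the numbers are invented, and the test is only one of several reasonable choices (bootstrap intervals are another).

```python
import math

def selection_rate_test(selected_a, n_a, selected_b, n_b):
    """Two-proportion z-test on selection rates.

    Returns (rate difference, z statistic, two-sided p-value).
    """
    p_a = selected_a / n_a
    p_b = selected_b / n_b
    pooled = (selected_a + selected_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # All or none selected in both cohorts: no measurable difference.
        return p_a - p_b, 0.0, 1.0
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return p_a - p_b, z, p_value

# Hypothetical counts: a small gap on large cohorts vs. a larger gap on tiny ones.
diff_large, z_large, p_large = selection_rate_test(300, 1000, 240, 1000)
diff_small, z_small, p_small = selection_rate_test(3, 10, 2, 10)
print(diff_large, p_large)
print(diff_small, p_small)
```

The six-point gap on 1,000 cases per cohort is unlikely to be chance, while the ten-point gap on 10 cases per cohort is well within noise. Statistical significance still has to be weighed against operational impact: a small but real gap on a high-stakes decision can matter more than a large gap on a low-stakes one.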

Use explainability and error analysis to locate what drives disparity. If you need deeper model reasoning visibility, connect to Explainable AI.

Select the smallest intervention that reduces unfair model behavior without breaking product goals: rebalance data, improve labels, adjust objectives, or tune decision thresholds.
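As one example of a small intervention, the sketch below sweeps a single global decision threshold and picks the candidate with the smallest true-positive-rate gap between cohorts, subject to an accuracy floor. The scores, cohort names, candidate thresholds, and the floor are all hypothetical, and TPR gap is only one possible fairness target.

```python
def evaluate(threshold, rows):
    """rows: (cohort, score, y_true) tuples. Returns (accuracy, tpr_gap)."""
    correct = 0
    true_pos, positives = {}, {}
    for cohort, score, y_true in rows:
        y_pred = 1 if score >= threshold else 0
        correct += int(y_pred == y_true)
        if y_true == 1:
            positives[cohort] = positives.get(cohort, 0) + 1
            true_pos[cohort] = true_pos.get(cohort, 0) + y_pred
    tprs = [true_pos[c] / positives[c] for c in positives]
    gap = max(tprs) - min(tprs) if tprs else 0.0
    return correct / len(rows), gap

def pick_threshold(rows, candidates, min_accuracy):
    """Smallest TPR gap among candidates meeting the accuracy floor.

    Ties on gap are broken by higher accuracy. Returns
    (threshold, accuracy, tpr_gap), or None if no candidate qualifies.
    """
    best = None
    for t in candidates:
        acc, gap = evaluate(t, rows)
        if acc < min_accuracy:
            continue
        if best is None or gap < best[2] or (gap == best[2] and acc > best[1]):
            best = (t, acc, gap)
    return best

# Hypothetical scored examples: (cohort, model_score, true_label)
rows = [
    ("a", 0.90, 1), ("a", 0.80, 1), ("a", 0.70, 0), ("a", 0.60, 0),
    ("b", 0.78, 1), ("b", 0.55, 1), ("b", 0.40, 0), ("b", 0.20, 0),
]
best = pick_threshold(rows, candidates=[0.5, 0.65, 0.75], min_accuracy=0.7)
print(best)
```

In this toy data the most accurate threshold (0.75) misses half of cohort b's true positives, while 0.5 closes the gap at the cost of lower accuracy. That is exactly the kind of trade-off that should be written down and approved rather than chosen silently.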

Re-run the full evaluation suite to confirm disparity decreased (and that performance didn’t degrade in critical slices). Document what remains and how you’ll monitor it.
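The validation step can be expressed as a simple release gate. The sketch below assumes a hypothetical report shape (one overall disparity number plus accuracy per slice) and an illustrative tolerance of one accuracy point; both would be set by your own evaluation suite and policy.

```python
def validate_mitigation(before, after, critical_slices, max_accuracy_drop=0.01):
    """Compare two evaluation reports and list reasons to block the release.

    before / after: {"disparity": float, "accuracy_by_slice": {name: float}}
    An empty list means the mitigation passed this gate.
    """
    findings = []
    if after["disparity"] >= before["disparity"]:
        findings.append("disparity did not decrease")
    for name in critical_slices:
        drop = before["accuracy_by_slice"][name] - after["accuracy_by_slice"][name]
        if drop > max_accuracy_drop:
            findings.append(f"accuracy dropped by {drop:.3f} in slice '{name}'")
    return findings

# Hypothetical reports from two runs of the same evaluation suite
before = {"disparity": 0.12,
          "accuracy_by_slice": {"new_users": 0.90, "region_eu": 0.88}}
after = {"disparity": 0.04,
         "accuracy_by_slice": {"new_users": 0.895, "region_eu": 0.85}}
findings = validate_mitigation(before, after, ["new_users", "region_eu"])
print(findings)
```

Here disparity improved, but the gate still flags a regression in one critical slice, which is the residual risk to document and monitor.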

Store reports, thresholds, model versions, and approvals. For lifecycle traceability, pair with Model governance & lifecycle management.

Set dashboards and alerts for cohort outcomes, drift signals, and override rates. Operationalize with AI pipelines & monitoring so bias detection & mitigation remains an ongoing capability.
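A monitoring check along these lines could be as small as the sketch below, which summarizes one window of decision events and compares the result with alert limits. The event fields (`cohort`, `approved`, `overridden`) and the limit values are assumptions for illustration; drift signals would be added as further metrics in the same pattern.

```python
def window_summary(events):
    """Summarize one monitoring window of decision events.

    events: non-empty list of dicts with 'cohort', 'approved', 'overridden'.
    """
    by_cohort = {}
    overrides = 0
    for e in events:
        n, approved = by_cohort.get(e["cohort"], (0, 0))
        by_cohort[e["cohort"]] = (n + 1, approved + int(e["approved"]))
        overrides += int(e["overridden"])
    rates = {c: a / n for c, (n, a) in by_cohort.items()}
    return {
        "approval_rate_gap": max(rates.values()) - min(rates.values()),
        "override_rate": overrides / len(events),
    }

def check_alerts(metrics, limits):
    """Return an alert message for every metric above its limit or missing."""
    alerts = []
    for name, limit in limits.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no data in window")
        elif value > limit:
            alerts.append(f"{name}: {value:.3f} exceeds limit {limit:.3f}")
    return alerts

# Hypothetical window: cohort x approved 3 of 4, cohort y approved 1 of 4
events = (
    [{"cohort": "x", "approved": True, "overridden": False}] * 3
    + [{"cohort": "x", "approved": False, "overridden": True}]
    + [{"cohort": "y", "approved": True, "overridden": False}]
    + [{"cohort": "y", "approved": False, "overridden": False}] * 3
)
metrics = window_summary(events)
alerts = check_alerts(metrics, {"approval_rate_gap": 0.2, "override_rate": 0.15})
print(alerts)
```

Keeping the limits in configuration next to the approved fairness definition makes each alert traceable to a documented decision.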

Your fastest win is repeatability: one slice framework, one fairness evaluation suite, and one monitoring dashboard pattern reused across every model.

Who are we?

SHAPE helps companies build in-house AI workflows that optimise their business. If you’re looking for efficiency, we believe we can help.

FAQs

Find answers to your most pressing questions about our services and data ownership.

All generated data is yours. We prioritize your ownership and privacy. You can access and manage it anytime.

Absolutely! Our solutions are designed to integrate seamlessly with your existing software. Regardless of your current setup, we can find a compatible solution.

We provide comprehensive support to ensure a smooth experience. Our team is available for assistance and troubleshooting. We also offer resources to help you maximize our tools.

Yes, customization is a key feature of our platform. You can tailor the nature of your agent to fit your brand's voice and target audience. This flexibility enhances engagement and effectiveness.

We adapt pricing to each company and their needs. Since our solutions consist of smart custom integrations, the end cost heavily depends on the integration tactics.